Overview of the network #

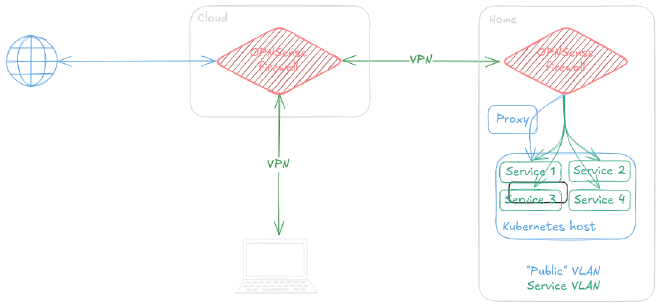

Most of the network is hosted at home. To access it remotely, I use a VPN (WireGuard). Over the years, this homelab has moved through several homes — some of which didn’t allow me to connect directly — so I set up a cloud firewall that acts as a gateway to the network.

The goal was to configure the Kubernetes network subnet to integrate with my homelab, set up routing so the OPNSense router at home could route this subnet, and then share those routes with the OPNSense router in the cloud. This allows me to access services over VPN or even expose them to the internet.

In the end, the cluster uses Calico for internal traffic, MetalLB for load balancing, and exposes routes using iBGP to the first OPNSense router. The two OPNSense routers exchange routes using OSPF/OSPFv3, but I won’t cover that part here.

Yes, this is overkill — and probably not the most efficient setup — but it’s a fun way to learn and tinker.

Kubernetes setup #

I won’t go into detail about setting up the Kubernetes cluster itself, as I started from an existing setup. For most of my projects, I use k3s.

Calico setup #

Following the documentation, we can install Calico like this:

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.29.1/manifests/tigera-operator.yaml

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.29.1/manifests/custom-resources.yaml

Or you can customize it to your needs. In my case, I disabled BGP export as I didn’t want pods to be routable externally:

apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  calicoNetwork:
    ipPools:
      - name: default-ipv4-ippool
        blockSize: 26
        cidr: 192.168.0.0/16
        encapsulation: VXLANCrossSubnet
        natOutgoing: Enabled
        nodeSelector: all()
        disableBGPExport: true
---
apiVersion: operator.tigera.io/v1
kind: APIServer
metadata:
  name: default
spec: {}
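As a quick sanity check on the pool above, here is a small sketch of the arithmetic behind `blockSize: 26`: how many blocks a /16 pool splits into, and how many addresses each block holds. It only uses Python's standard `ipaddress` module. The helper name `block_stats` is made up for this illustration; the CIDR and block size are the ones from the manifest.

```python
import ipaddress


def block_stats(pool_cidr: str, block_size: int) -> tuple[int, int]:
    """Return (number of blocks, addresses per block) for an IP pool.

    Hypothetical helper for illustration; it only does the subnet arithmetic
    and does not talk to Calico.
    """
    pool = ipaddress.ip_network(pool_cidr)
    if block_size < pool.prefixlen:
        raise ValueError("a block cannot be larger than the pool itself")
    # Each extra prefix bit doubles the number of blocks.
    blocks = 2 ** (block_size - pool.prefixlen)
    # The remaining host bits determine the addresses in one block.
    addresses_per_block = 2 ** (pool.max_prefixlen - block_size)
    return blocks, addresses_per_block


if __name__ == "__main__":
    blocks, per_block = block_stats("192.168.0.0/16", 26)
    print(f"{blocks} blocks of {per_block} addresses")  # 1024 blocks of 64 addresses
```

With these values the pool splits into 1024 blocks of 64 addresses, which should be far more than a homelab cluster needs.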

Next we configure BGP:

apiVersion: projectcalico.org/v3
kind: BGPConfiguration
metadata:
  name: bgp-config-name
spec:
  asNumber: <CLUSTER_ASN>
  nodeToNodeMeshEnabled: true
  serviceLoadBalancerIPs:
    - cidr: <SERVICE_SUBNET>
---
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: bgp-peer-name
spec:
  peerIP: <ROUTER_1_ADDRESS>
  asNumber: <ROUTER_ASN>
  keepOriginalNextHop: true
  maxRestartTime: 15m

Replace <CLUSTER_ASN> and <ROUTER_ASN> with values from the private 16-bit ASN range (64512 to 65534), or with your own ASN if you have one.

Also replace <SERVICE_SUBNET> with the desired subnet, e.g. 10.42.42.0/24.
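Before applying the manifests, it can help to double-check the values you picked. The sketch below tests whether an ASN falls in the 64512 to 65534 range and whether a candidate service subnet overlaps the pod pool (192.168.0.0/16 in my Installation). Both function names are hypothetical, and the rule that the two ranges should not overlap is my own assumption about a sane layout, not something Calico states here.

```python
import ipaddress

PRIVATE_ASN_MIN = 64512  # start of the 16-bit private-use range
PRIVATE_ASN_MAX = 65534  # end of the 16-bit private-use range


def is_private_asn(asn: int) -> bool:
    """True if the ASN lies in the 16-bit private-use range."""
    return PRIVATE_ASN_MIN <= asn <= PRIVATE_ASN_MAX


def overlaps_pod_pool(service_subnet: str, pod_pool: str = "192.168.0.0/16") -> bool:
    """True if the service subnet shares any address with the pod pool.

    The default pod_pool is the CIDR used in the Installation manifest.
    """
    service = ipaddress.ip_network(service_subnet)
    pods = ipaddress.ip_network(pod_pool)
    return service.overlaps(pods)


if __name__ == "__main__":
    print(is_private_asn(64512))                 # True
    print(is_private_asn(65535))                 # False
    print(overlaps_pod_pool("10.42.42.0/24"))    # False
    print(overlaps_pod_pool("192.168.10.0/24"))  # True
```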

MetalLB setup #

Installing MetalLB is pretty straightforward. I used the Bitnami Helm chart to install the controller and disabled the speaker, since Calico already announces the load balancer IPs over BGP (see this):

helm install metallb --set speaker.enabled=false oci://registry-1.docker.io/bitnamicharts/metallb

Then I created an IP pool:

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: metallb-ippool-name
spec:
  addresses:
    - <SERVICE_SUBNET>

OPNSense setup #

BGP Configuration #

In Routing > BGP, add your Kubernetes cluster as a neighbor.

- Set a nice Description.
- Use your Kubernetes node IP as the Peer IP. For multi-node setups, add a neighbor for each.
- Set the Remote AS to your <CLUSTER_ASN>.
- Choose the correct Update Source Interface (the one connected to the cluster).
- Check Next-Hop-Self. This is to ensure traffic will flow through this router.

You can optionally enable BFD for quicker failure recovery, and use Route Maps to filter which routes are accepted or advertised. (I also participate in dn42, so I keep my BGP routes clean and isolated.)

Bonus: DNS #

This setup is great — I can access services from my network and remotely — but I still need to know each service’s IP. Since these can change after a reboot, I wanted DNS resolution for load-balanced services.

To achieve that, I used the CoreDNS service bundled with k3s. Here’s how to expose it via MetalLB with a static IP and configure it to serve your domain:

apiVersion: v1
kind: Service
metadata:
  name: coredns-udp
  namespace: kube-system
  annotations:
    metallb.universe.tf/allow-shared-ip: "DNS"
spec:
  type: LoadBalancer
  loadBalancerIP: <STATIC_IP_FOR_COREDNS>
  selector:
    k8s-app: kube-dns
  ports:
    - port: 53
      targetPort: 53
      protocol: UDP
---
apiVersion: v1
kind: Service
metadata:
  name: coredns-tcp
  namespace: kube-system
  annotations:
    metallb.universe.tf/allow-shared-ip: "DNS"
spec:
  type: LoadBalancer
  loadBalancerIP: <STATIC_IP_FOR_COREDNS>
  selector:
    k8s-app: kube-dns
  ports:
    - port: 53
      targetPort: 53
      protocol: TCP
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns-custom
  namespace: kube-system
data:
  external.server: |
    <BASE_DOMAIN_OF_YOUR_SERVICES>:53 {
        kubernetes
        k8s_external <BASE_DOMAIN_OF_YOUR_SERVICES>
    }

Finally, in OPNSense, create a Query Forwarding rule in Unbound for your domain <BASE_DOMAIN_OF_YOUR_SERVICES> pointing to <STATIC_IP_FOR_COREDNS>.

Congrats, your services are now accessible via: <SERVICE_NAME>.<SERVICE_NAMESPACE>.<BASE_DOMAIN_OF_YOUR_SERVICES>!
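To make the naming pattern concrete, here is a minimal sketch that builds the name a service ends up under and checks that the static CoreDNS IP sits inside the service subnet. The function names, the `grafana`/`monitoring` service, the `k8s.example.net` domain and the `10.42.42.53` address are all invented for the example. That the static IP has to come from <SERVICE_SUBNET> is my assumption, based on MetalLB assigning addresses from the pool defined earlier.

```python
import ipaddress


def external_name(service: str, namespace: str, base_domain: str) -> str:
    """Build <SERVICE_NAME>.<SERVICE_NAMESPACE>.<BASE_DOMAIN_OF_YOUR_SERVICES>."""
    # Drop stray dots so "example.net." and "example.net" give the same name.
    return f"{service}.{namespace}.{base_domain.strip('.')}"


def ip_in_subnet(ip: str, subnet: str) -> bool:
    """True if the address belongs to the given subnet."""
    return ipaddress.ip_address(ip) in ipaddress.ip_network(subnet)


if __name__ == "__main__":
    # Hypothetical service, namespace and domain.
    print(external_name("grafana", "monitoring", "k8s.example.net"))
    # grafana.monitoring.k8s.example.net

    # Hypothetical static IP for CoreDNS inside the example service subnet.
    print(ip_in_subnet("10.42.42.53", "10.42.42.0/24"))  # True
    print(ip_in_subnet("10.42.43.53", "10.42.42.0/24"))  # False
```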